2023-01-27

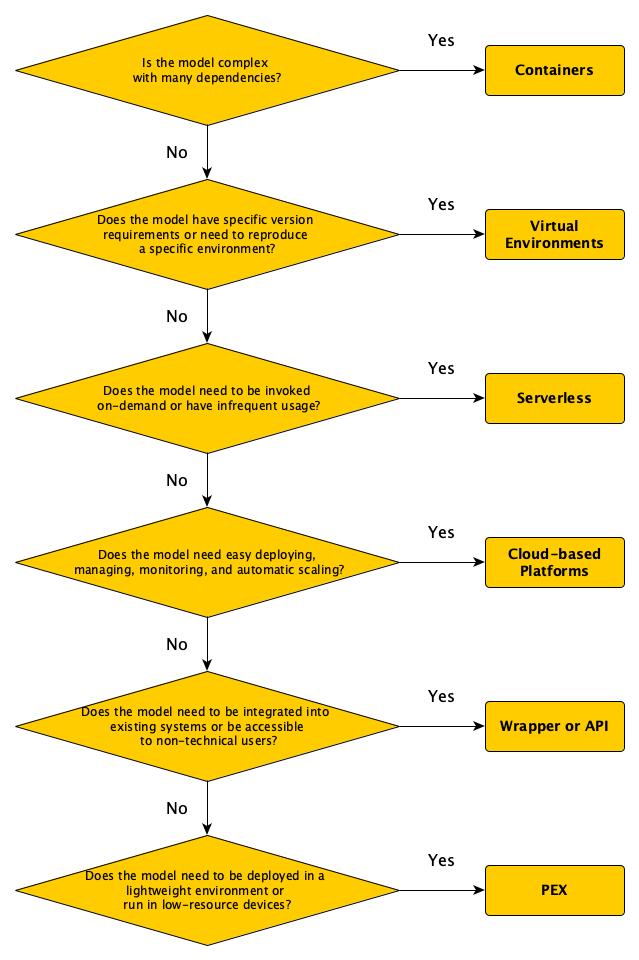

Which Method of Model Packaging Should I Used?

There are several alternative methods of packaging ML models, and the appropriate method will depend on the specific use case and requirements. Some alternative methods include:

Complex models that have many dependencies, or for models that need to run on specific operating systems -> Containers

Containers, such as Docker, provide a way to package and distribute the entire runtime environment for a model, including the operating system, libraries, and dependencies. This can be useful for complex models that have many dependencies, or for models that need to run on specific operating systems.

Models that have specific version requirements, or for reproducing a specific environment -> Virtual environments

Virtual environments, such as conda, provide a way to create isolated Python environments that include specific versions of libraries and dependencies. This can be useful for models that have specific version requirements, or for reproducing a specific environment.

Models that need to be invoked on-demand or for models that have infrequent usage -> Serverless

Serverless computing, such as AWS Lambda or Google Cloud Functions, allows you to run code without provisioning or managing servers. This can be useful for models that need to be invoked on-demand or for models that have infrequent usage.

Models that need to be deployed in a lightweight environment, or for models that need to run in low-resource devices -> PEX

PEX (Python Executable) is a way to package a Python application and all its dependencies into a single file, which can be run without a separate Python installation. This can be useful for models that need to be deployed in a lightweight environment, or for models that need to run in low-resource devices.

Easy deploying, managing, and monitoring models, automatic scaling -> Cloud-based Platforms

Some cloud-based platforms like AWS SageMaker, Google Cloud ML Engine, and Azure Machine Learning Studio provide easy to use environment for deploying, managing, and monitoring ML models. They also provide automatic scaling, automatic patching, and other benefits.

Models that need to be integrated into existing systems -> Wrapper or API

Creating a wrapper or API around the model, which can be used to interact with the model via a web interface or an API. This can be useful for models that need to be integrated into existing systems or for making the model accessible to non-technical users.

Decision algorithm

Ultimately, the choice of packaging method will depend on the specific requirements of the model, the target audience, and the resources available. It's worth considering the trade-offs between different options and choosing the one that best fits your use case.

Tags:

machine-learning