2023-01-21

MLOps Scorecard - How Advanced Is Your Organization in Implementing MLOps Processes?

Use proposed scorecard to assess how advanced is your organization in implementing MLOps processes.

In this article I propose a sample MLOps scorecard that organizations can use to assess their current level of MLOps implementation. All what's needed is to count score (0-100) in each of the 6 layers of introducing MLOps practices:

Analyze organization along 6 dimensions

1. Governance

- [ ] Does the organization have a clear ML strategy and governance structure in place?

- [ ] Are there policies and procedures for data management, model development, and deployment?

- [ ] Is there a designated team responsible for MLOps?

Additional ideas for the questions in this category:

- Do we have a clear and well-defined governance structure for our ML projects?

- Are there clearly defined roles and responsibilities for ML project team members?

- Do we have a process in place for managing and controlling access to data used in ML projects?

- Are there policies and procedures in place to ensure that ML models are developed and deployed ethically?

- Do we have a process in place for regularly reviewing and updating our ML governance framework?

2. Data Management

- [ ] Is there a centralized repository for storing and managing data?

- [ ] Is data preprocessing and feature engineering automated?

- [ ] Are there mechanisms in place for data quality control and validation?

Additional ideas for the questions in this category:

- Do we have a process in place for collecting, storing, and maintaining the integrity of data used in ML projects?

- Are there clear data quality standards and procedures in place to ensure that data used in ML projects is fit for purpose?

- Do we have a process in place for versioning and tracking changes to data used in ML projects?

- Are there procedures in place to ensure that data used in ML projects is kept secure and protected against unauthorized access?

- Do we have a process in place for monitoring data usage and ensuring compliance with relevant regulations and laws?

3. Model Development

- [ ] Is there a standard development process for creating and testing ML models?

- [ ] Are there tools and frameworks in place to facilitate collaboration and version control?

- [ ] Are there mechanisms in place to track the performance of models over time?

Additional ideas for the questions in this category:

- Are there clear standards and guidelines in place for developing, testing, and evaluating models?

- Is there a process in place for version control and reproducibility of models?

- Are there testing and evaluation procedures in place to ensure the model's performance and accuracy?

- Are there processes in place for monitoring and addressing bias in the models?

- Is there a process in place for continuous improvement and updating of models in response to new data and changing requirements?

4. Deployment

- [ ] Are models deployed in a consistent and repeatable manner?

- [ ] Are there mechanisms in place for monitoring and maintaining deployed models?

- [ ] Are there processes for rolling out updates to models?

Additional ideas for the questions in this category:

- Is there a process in place for deploying models to production systems?

- Are there procedures in place for monitoring and maintaining deployed models?

- Is there a process in place for rolling out updates and new versions of models?

- Are there procedures in place for monitoring and addressing model performance in production?

- Are there processes in place for managing and securing the data and resources used by deployed models?

5. Monitoring and Evaluation

- [ ] Are there metrics in place to measure the performance of models in production?

- [ ] Are there mechanisms for capturing and analyzing feedback from users?

- [ ] Are there processes in place for conducting regular audits and evaluations of ML systems?

Additional ideas for the questions in this category:

- Is there a process in place for monitoring model performance and accuracy in production?

- Are there procedures in place for evaluating model performance against business objectives and goals?

- Are there processes in place for identifying and addressing any issues or errors with deployed models?

- Are there procedures in place for collecting and analyzing feedback on deployed models from end-users?

- Are there processes in place for implementing and evaluating changes to improve model performance over time?

6. Continuous Improvement

- [ ] Are there regular reviews of the MLOps process in place?

- [ ] Are there processes for continuous improvement and experimentation?

- [ ] Is there a culture of learning and adaptation in the organization?

Additional ideas for the questions in this category:

- Is there a process in place for regularly reviewing and improving deployed models?

- Are there procedures in place for incorporating feedback and new data into model development and deployment?

- Is there a process in place for regularly retraining and updating models?

- Are there procedures in place for monitoring and addressing shifts in data distribution and model drift?

- Are there processes in place for conducting impact analysis and A/B testing of new models or updates?

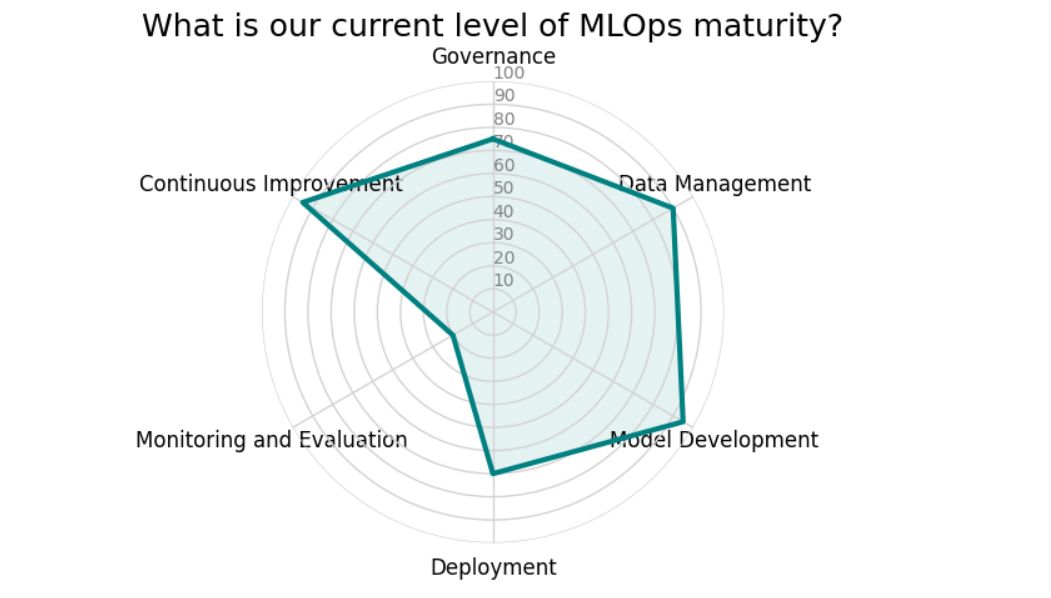

Visualize it

Finally, you can use radar plot to present the results visually, as example see the radar chart below:

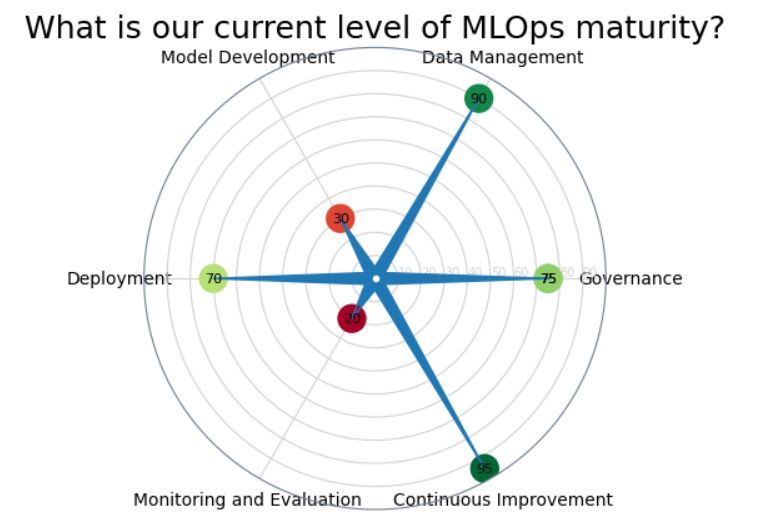

or similar Stellar plot as below:

You can use other type of chart that suit your needs to present the results in a visual manner.

Limitations that you should be aware of

There are a few potential heads-up to keep in mind when using this kind of evaluation for measuring the maturity of an organization's MLOps processes.

Factors limiting potential utility of such analysis

1. Subjectivity

The evaluation process may be subject to personal biases and interpretations, making it difficult to obtain consistent and accurate results.

2. Lack of context

The evaluation process may not take into account the specific context and constraints of the organization, leading to results that may not be relevant or applicable.

3. Limited scope

The evaluation process may only assess a limited number of aspects of MLOps processes, leading to an incomplete understanding of the organization's MLOps maturity.

4. Limited flexibility

The evaluation process may be limited in its ability to adapt to changing conditions and requirements, making it difficult to maintain its relevance over time.

5. Limited actionability

The results of the evaluation process may not be actionable, meaning that they may not provide clear guidance on how to improve the organization's MLOps processes.

Hints for overcoming limitations

In order to overcome these limitations, it's advisable to:

- Ensure that the evaluation process is carried out by a diverse group of individuals with relevant expertise, to reduce the influence of personal biases.

- Tailor the evaluation process to the specific context and constraints of the organization.

- Continuously update the evaluation process to reflect new trends, best practices, and regulations.

- Use the evaluation results to develop a clear plan for improving the organization's MLOps processes and track progress over time.

- Consider using a combination of quantitative and qualitative methods for evaluating MLOps maturity, in order to have a more comprehensive understanding.

To have more reliable insights in progress of introducing MLOps try to use this method in conjunction with other methods such as interviews, surveys, and data analysis.

Any comments or suggestions? Let me know.

To cite this article:

@article{Saf2023MLOps,

author = {Krystian Safjan},

title = {MLOps Scorecard - How Advanced Is Your Organization in Implementing MLOps Processes?},

journal = {Krystian's Safjan Blog},

year = {2023},

}